If you ever find yourself in a position where you need to remove duplicate entries on a MongoDB collection, as per version 3.0 you will need to use Aggregation . This might seem a bit confusing because in SQL you only need to use a simple “Group By”, which will display the data fields grouped by certain column like in the following example:

Select * From users;

| ID | Name | Type |

|—-|———–|——–|

| 1 | Doug | Dog |

| 2 | Snowball | Cat |

| 3 | Mabel | Dog |

| 4 | Drogo | Dragon |

See how we have 2 dogs, 1 cat and 1 Dragon, suppose you want to count how many animals of each type we have at the zoo, in SQL we can use ‘Group by’ in the following way;

Select count(type) as Amount, type as Type From users Group By type;

| Amount | Type |

|——–|——–|

| 2 | Dog |

| 1 | Cat |

| 1 | Dragon |

This is how you are probably accustomed to seeing a visualization of the data from a SQL query. However, to obtain the same results in MongoDB, you would have to use the ‘aggregate’ function to obtain the same result set.

It’s important to note that MongoDB is a No-SQL database that stores objects in a binary format called BSON , think of it as loosely based on the popular JSON. MongoDB is a distributed database meaning that high availability, horizontal scaling, and geographic distribution are built in and easy to use. Instead of the usual relational data on a SQL database. Mongo is Schema-less, so you’re not confined or limited to any structure on your collection for example:

db.Users.find({});

{

id: “asd123123sadfas”,

name: “Jose”,

lastname: “bonfil”

}

On a normal SQL database we’re limited by a schema, I’ll use the example below to demonstrate a key difference :

| Id | Name | Last Name |

|—-|———-|———–|

| 1 | Jose | Bonfil |

| 2 | Eduardo | Evia |

| 3 | Jon | Doe |

While this seems to be perfect, the problem here is if you want to add a new property for a specific record (for example ‘active’), it would mean adding this to every record even if we don’t really need this property on other records which would fill our database with unnecessary data or risk complicating future result sets. But on No-SQL we can simply add the property to the records we needed like this:

Let’s start by inserting the first document with the code below:

db.Users.insert({

name: “Jose”,

lastname: “bonfil”

});

The _id will be auto inserted by MongoDB, we can make some modifications as to the length of the id and also other properties or even insert one of our own but this must be unique to avoid issues. As we’re not attached to any fixed schema we can use a different structure in the next insert:

db.Users.insert({

name: “Jose”,

lastname: “bonfil”,

active: true

});

We can also insert multiples:

db.Users.insert([

{

name: “Jose”,

lastname: “bonfil”

},

{

name: “Jose”,

lastname: “bonfil”,

active: true

}

]);

If we use the find function we can get the documents on a collection:

db.Users.find({});

{

_id: “asd123123sadfas”,

name: “Jose”,

lastname: “bonfil”

},

{

_id: “ab1929292999av”,

name: “Eduardo”,

lastname: “Evia”,

active: true

}

We don’t need to follow the same structure on all the fields, however this could lead to complications with large data sets, so try to keep a firm handle on this.

Using Aggregate Command

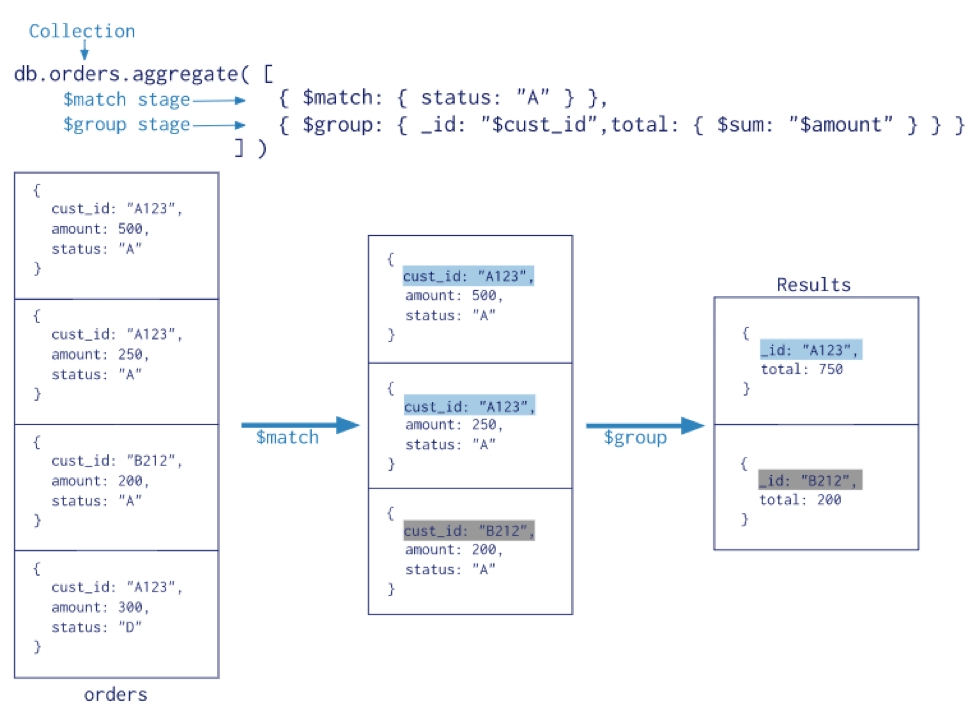

You can find duplicate values within your MongoDB database using the aggregate method along with the $group and $match aggregation pipeline operators.

db.Users.aggegrate([ … ]);

Aggregation operations process data records and return computed results. Aggregation operations group values from multiple documents together, and can perform a variety of operations on the grouped data to return a single result. MongoDB provides three ways to perform aggregation: the aggregation pipeline, the map-reduce function, and single purpose aggregation methods.

Aggregation Pipeline

MongoDB’s (No-SQL) aggregation framework is modeled on the concept of data processing pipelines. Documents enter a multi-stage pipeline that transforms the documents into an aggregated result.

The most basic pipeline stages provide filters that operate like queries and document transformations that modify the form of the output document.

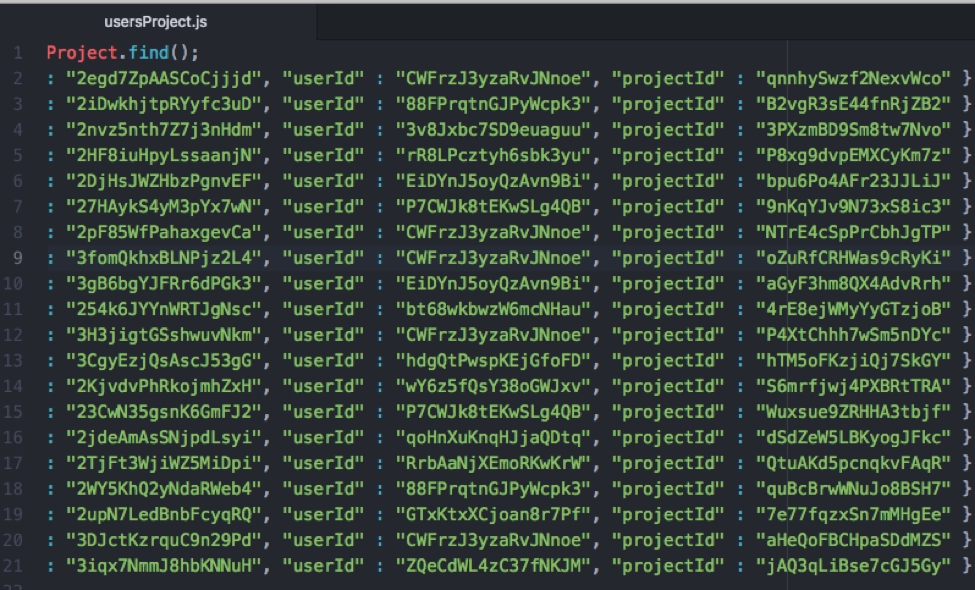

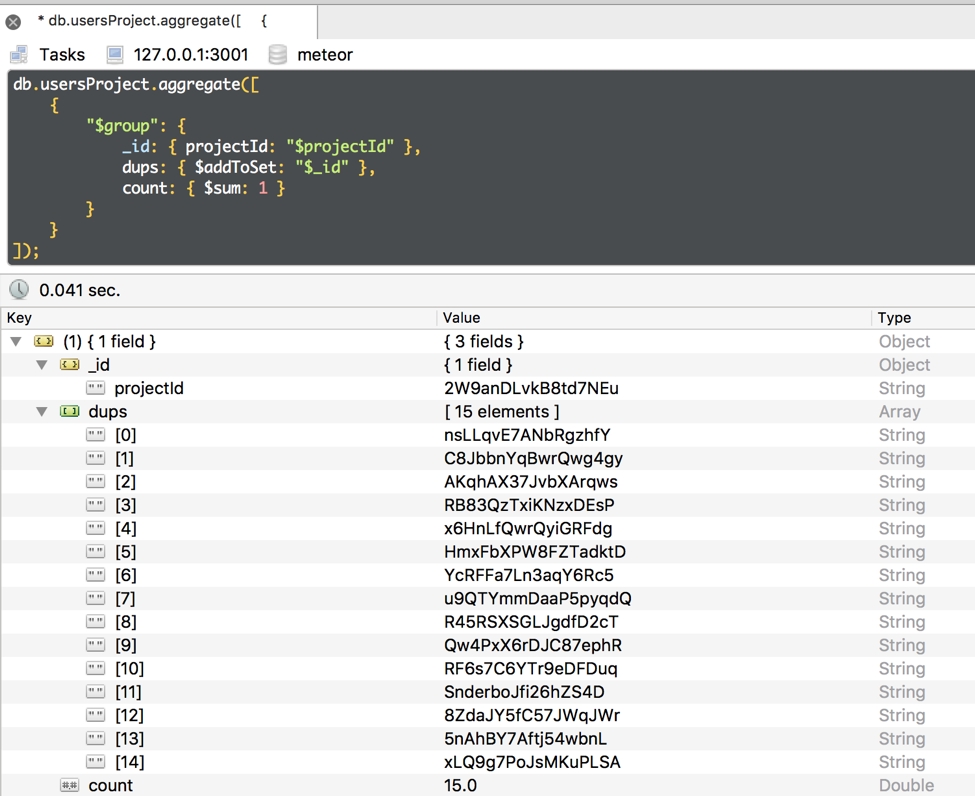

We’lll use the usersProjects collection for this example, we can see the structure in the image bellow:

Picture this being the “schema” on our collection, let’s expand a bit on this:

usersProject

{

_id: “2egd7ZpAASCoCjjjd”, // The id of this document.

userId: “CWFrzJ3yzaRvJNnoe”, // The user id, this is related to another collection.

projectId: “qnnhySwzf2NexvWco” // The project id which is related to another collection.

}

We can use the Aggregate function to find duplicate entries, make some groupings, and other queries very useful:

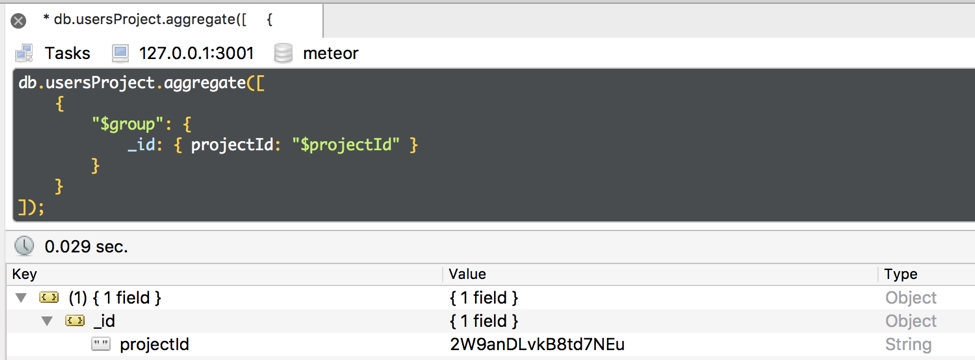

db.usersProject.aggregrate([

{

$group: {

_id: { projectId: “$projectId” }

}

}

]);

The $group operator needs an id on which he will make the grouping and the projectId is the name of the field with the content on $projectId we need only one project per user.

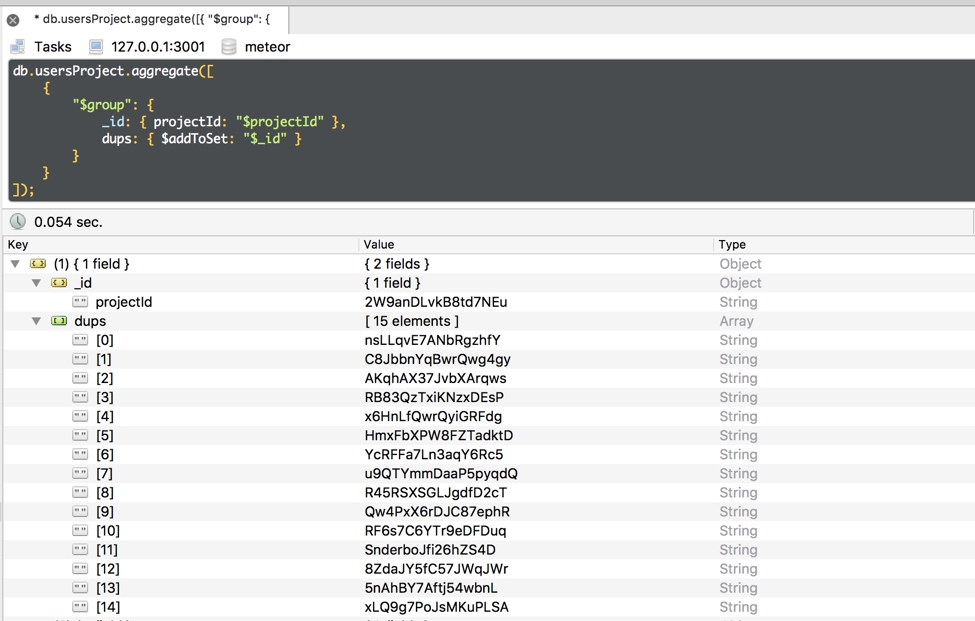

Now we need another field to check the duplicate entries we can do this with another parameter

db.usersProject.aggregrate([

{

$group: {

_id: { projectId: “$projectId” },

dups: { $addToSet: “$_id” },

}

}

]);

Note: using Robo 3T

Now, stop and check to see which groupings have more than one unique ObjectId and figure out which document we want to keep. However, imagine if we had hundreds or thousands of documents. It would become very tedious to look for all of the groupings with more than one unique ObjectId. So, let’s make it a little easier by including another field in our $group pipeline operator that will count the documents.

The count field uses the $sum operator which adds the expression 1 to the total for this group for each document in the group. When used in the $group stage, $sum returns the collective sum of all the numeric values that result from applying a specified expression to each document in a group of documents that share the same group by key.

For our example, the aggregation will be as you can see below:

db.usersProject.aggregrate([

{

$group: {

_id: { projectId: “$projectId” },

dups: { $addToSet: “$_id” },

count: { $sum: 1 }

}

}

]);

To get only the groups that have a count of more than one, we can use the $match operator to filter our results. Within the $match pipeline operator, we’ll tell it to look at the count field and tell it to look for counts greater than one using the $gt operator representing “greater than” and the number 1. This is what that looks like:

db.usersProject.aggregate([

{

$group:

{

_id: { projectId: "$projectId" },

dups: { $addToSet: "$_id" },

count: { $sum:1 }

}

},

{

$match:

{

count: {"$gt": 1}

}

}

]);

Now that we have the data that we need to delete, so we will iterate over the results with a forEach and then pass a parameter will be the item by item on the iteration.

db.usersProject.aggregate([

{

"$group": {

_id: {projectId: "$projectId"},

dups: { $addToSet: "$_id" } ,

count: { $sum : 1 }

}

},

{

"$match": {

count: { "$gt": 1 }

}

}

]).forEach(function(doc) {

doc.dups.shift();

db.usersProject.remove({

_id: {$in: doc.dups}

});

})

Using JavaScript chaining functions we can add the forEach and then shift the first element which will be not deleted on the collection, we can then use the remove() function to erase element by element, passing the id as a parameter in a remove operation on our collection.

This will clean our collection of the repeated items, also take into consideration the execution time and performance, you could potentially use this to create more logic combined with aggregation. If you want to take a look at other, possibly more advanced, options for aggregation checkout the official MongoDb documentation :